YouTube magic that brings views, likes and suibscribers

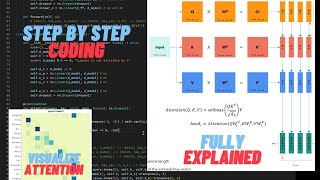

Attention is all you need (Transformer) - Model explanation (including math) Inference and Training

A complete explanation of all the layers of a Transformer Model: MultiHead SelfAttention, Positional Encoding, including all the matrix multiplications and a complete description of the training and inference process.

Paper: Attention is all you need https://arxiv.org/abs/1706.03762

Slides PDF: https://github.com/hkproj/transformer...

Chapters

00:00 Intro

01:10 RNN and their problems

08:04 Transformer Model

09:02 Maths background and notations

12:20 Encoder (overview)

12:31 Input Embeddings

15:04 Positional Encoding

20:08 Single Head SelfAttention

28:30 MultiHead Attention

35:39 Query, Key, Value

37:55 Layer Normalization

40:13 Decoder (overview)

42:24 Masked MultiHead Attention

44:59 Training

52:09 Inference

Recommended

![BERT explained: Training, Inference, BERT vs GPT/LLamA, Fine tuning, [CLS] token](https://i.ytimg.com/vi/90mGPxR2GgY/mqdefault.jpg)