Lecture 10 | Recurrent Neural Networks

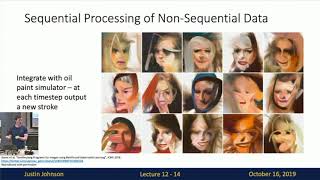

In Lecture 10 we discuss the use of recurrent neural networks for modeling sequence data. We show how recurrent neural networks can be used for language modeling and image captioning, and how soft spatial attention can be incorporated into image captioning models. We discuss different architectures for recurrent neural networks, including Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU).
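A minimal pure-Python sketch of the two recurrences contrasted above, assuming the common formulations: a vanilla RNN step h_t = tanh(W_hh h_{t-1} + W_xh x_t + b), and an LSTM step where one weight matrix maps the concatenation [h_{t-1}; x_t] to four stacked gate pre-activations. The gate ordering [i, f, o, g] and all helper names (`matvec`, `rnn_step`, `lstm_step`, `run_rnn`) are illustrative assumptions, not the course's assignment code.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def add(*vs):
    """Elementwise sum of equal-length vectors."""
    return [sum(xs) for xs in zip(*vs)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """Vanilla RNN: h_t = tanh(W_hh h_{t-1} + W_xh x_t + b)."""
    return [math.tanh(z) for z in add(matvec(W_hh, h_prev), matvec(W_xh, x), b)]

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has 4H rows acting on [h_prev; x] (assumed layout)."""
    H = len(h_prev)
    a = add(matvec(W, h_prev + x), b)           # list concat, then affine map
    i = [sigmoid(z) for z in a[0:H]]            # input gate
    f = [sigmoid(z) for z in a[H:2 * H]]        # forget gate
    o = [sigmoid(z) for z in a[2 * H:3 * H]]    # output gate
    g = [math.tanh(z) for z in a[3 * H:4 * H]]  # candidate cell values
    # Additive cell update: c_t = f * c_{t-1} + i * g
    c = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c_prev, i, g)]
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return h, c

def run_rnn(xs, h0, W_xh, W_hh, b):
    """Unroll the vanilla RNN over a sequence, reusing the same weights each step."""
    h, hs = h0, []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b)
        hs.append(h)
    return hs

# Usage: a 3-step sequence of 2-d inputs with a 3-d hidden state.
random.seed(0)
D, H = 2, 3
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(H)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(H)] for _ in range(H)]
b = [0.0] * H
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hs = run_rnn(xs, [0.0] * H, W_xh, W_hh, b)
print(len(hs), len(hs[-1]))  # 3 3
```

The key design point is weight sharing: `run_rnn` applies the same `W_xh`, `W_hh`, and `b` at every time step, so the model handles sequences of any length. The LSTM's additive cell update (`f * c_prev + i * g`) is what lets gradients flow across many steps more easily than through the repeated `tanh` of the vanilla cell.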

Keywords: Recurrent neural networks, RNN, language modeling, image captioning, soft attention, LSTM, GRU

Slides: http://cs231n.stanford.edu/slides/201...

Convolutional Neural Networks for Visual Recognition

Instructors:

Fei-Fei Li: http://vision.stanford.edu/feifeili/

Justin Johnson: http://cs.stanford.edu/people/jcjohns/

Serena Yeung: http://ai.stanford.edu/~syyeung/

Computer Vision has become ubiquitous in our society, with applications in search, image understanding, apps, mapping, medicine, drones, and self-driving cars. Core to many of these applications are visual recognition tasks such as image classification, localization and detection. Recent developments in neural network (aka “deep learning”) approaches have greatly advanced the performance of these state-of-the-art visual recognition systems. This lecture collection is a deep dive into details of the deep learning architectures with a focus on learning end-to-end models for these tasks, particularly image classification. From this lecture collection, students will learn to implement, train and debug their own neural networks and gain a detailed understanding of cutting-edge research in computer vision.

Website:

http://cs231n.stanford.edu/

For additional learning opportunities, please visit:

http://online.stanford.edu/